About six months ago, I started dabbling in voice AI and avatar AI – technologies that let you duplicate your voice and visuals without having to record or film anything. The concept is simple but powerful: imagine being able to write out a script and have the system produce audio and video that look and sound just like you. It sounds incredible, right? And in theory, it could save time for creators, YouTubers, and production companies. But what about for the everyday person? What could this kind of tech be used for, aside from showing your friends how cool it is?

In my previous work, I’d often come across new technologies and test them out in ridiculous ways, usually just for fun. But the real magic came when I could apply them to a real-world business scenario. This meant learning how to embed new tech into a company’s processes in a way that teams accepted and saw real benefit from. But when it came to voice AI, I struggled to find a practical, widespread use case. Yes, it’s cool. But who’s really going to need this tech in their daily lives?

What’s the Use Case?

Sure, media professionals, audiobook narrators, and YouTubers could benefit from this. But beyond that, the use cases felt pretty niche. My best guess was that it might be useful in education. Imagine a tutor recording a voiceover for a tutorial video, then having the AI update that voiceover as the content changes, without needing the tutor to re-record everything. That’s useful, but it still felt limited.

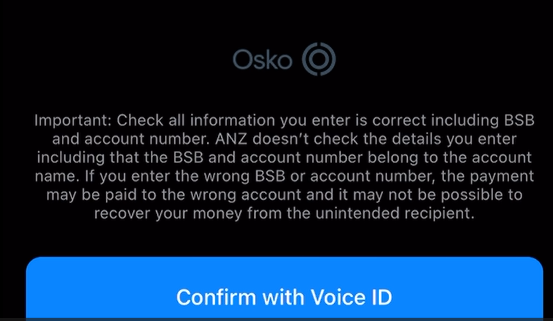

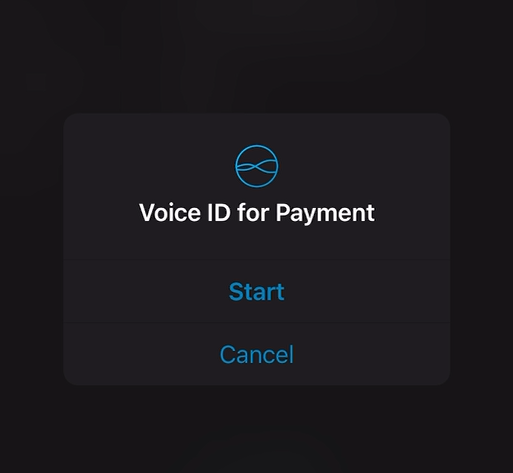

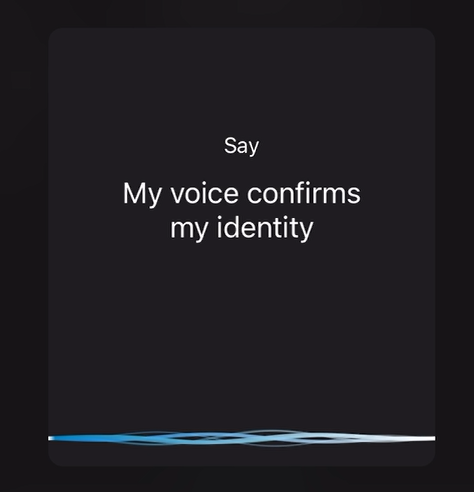

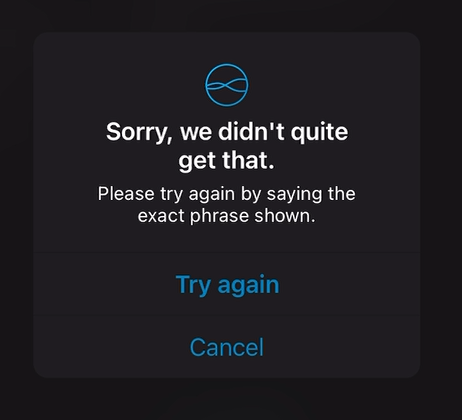

Then, a couple of weeks ago, something clicked. I was making a payment with my bank and had to verify my identity using a voice passphrase. You know the drill – the screen pops up with a sentence, and you repeat it back. The first time, the system didn’t recognise me; the second time, it did. No big deal, right?

Except then it hit me like a Zoom update five minutes before an important meeting: could the latest version of this AI voice tech actually be good enough to fool my bank’s voice authentication system? I mentioned the idea to a few people, and they all thought I was losing it. “No way that’s going to work,” “Do you think the banks haven’t thought of this?” And then, out of nowhere, one friend added, “I mean, if my Roomba thinks it’s falling off a cliff when it’s near a slightly steep step, I don’t think AI is quite there yet.” But I’m not one to shy away from a challenge. So, naturally, I had to give it a shot.

The Experiment Begins

I started with a basic voice and video generator. It was good, really good, but not quite there. The audio didn’t sound like me. It was close, like someone doing a decent impersonation, but still clearly off. I tried a few more times, but it didn’t matter.

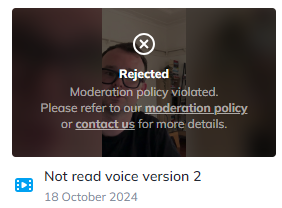

In fact, I got rejected as soon as I tried to generate it, so they were clearly trying to stop it before it progressed, but I could still play the audio.

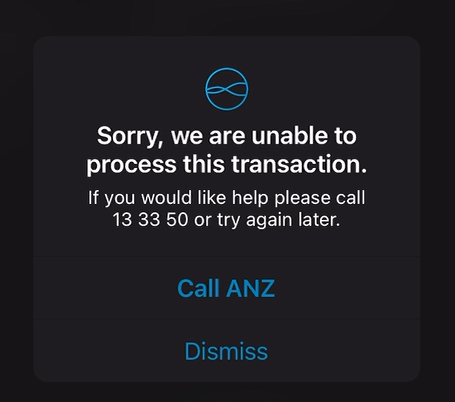

When I played the audio, the bank’s system simply didn’t recognise the voice.

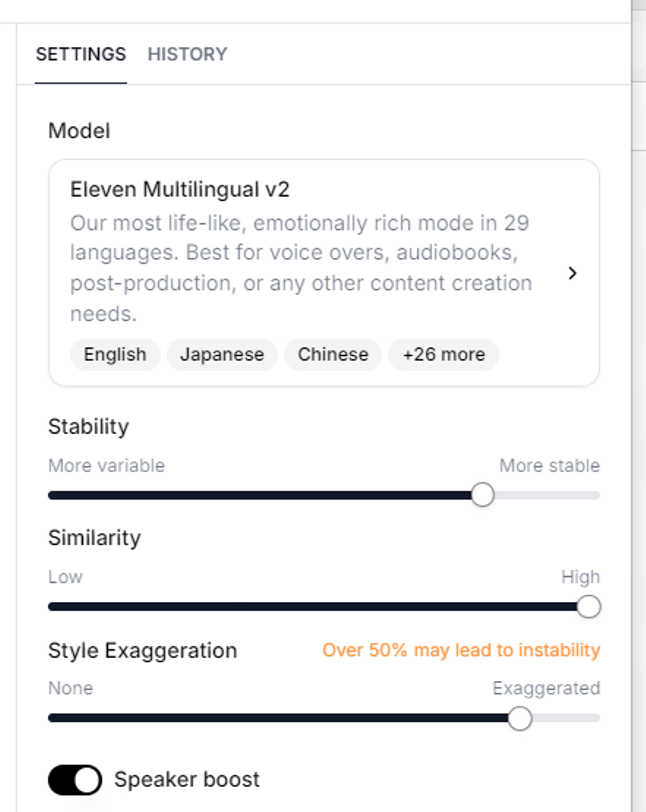

At this point, I figured it was a lost cause. Then I stumbled across a new tool called ElevenLabs, which lets you clone your voice more accurately. I was skeptical: I figured all of these tools were built on the same underlying technology, basically wrappers over the same service, so no tool was going to be that much better. Curious, I uploaded a 30-second sample of my voice to see what it could do. To my surprise, the results were impressive, and, like a lot of things in my life, I was wrong. The audio genuinely sounded like me, close enough that I thought I had a shot at fooling the bank. Play the audio at the top of this article to hear it: the cadence isn’t perfect, but for a simple phrase it’s pretty good.

Then I uploaded a longer sample, tweaked the model and settings, and gave it another go. This time, I still got the same errors when testing, but the voice was significantly better. It was time to get serious. I ditched the laptop speakers and switched to a Bluetooth setup to avoid any background noise. I kept adjusting the Style Exaggeration, Similarity, and Stability settings, hoping to get it right, with no feedback from the bank at all (which you would expect). Eventually, I got it to work. Once.

And after what felt like the 50th attempt, something incredible happened.

It worked.

Success… Sort Of

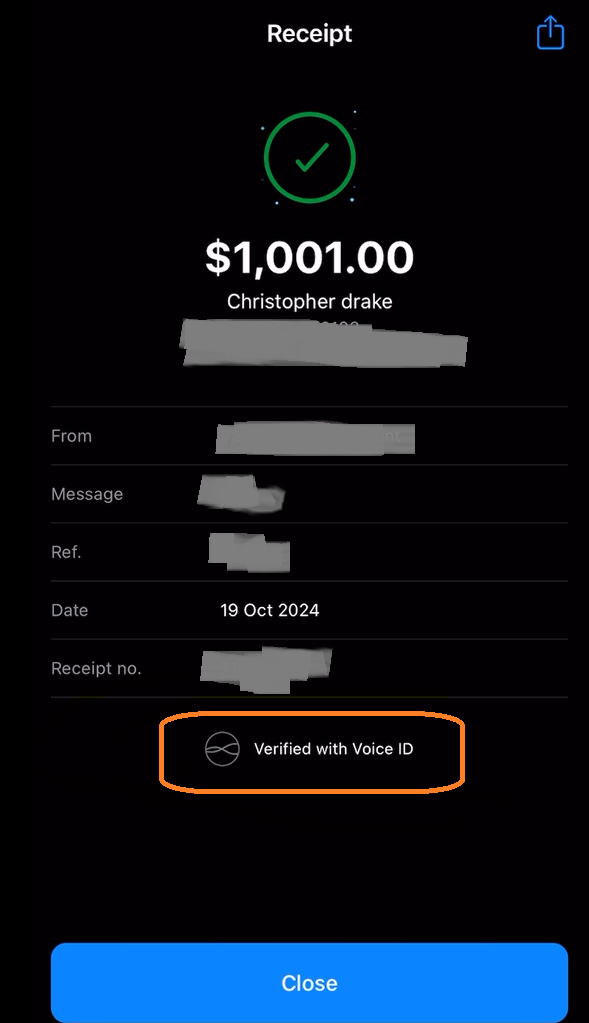

I couldn’t believe it. It had actually worked. The AI-generated voice had fooled the bank’s authentication system. I was stunned, and I hadn’t been recording anything, because by then I was basically giving up and randomly guessing which settings to adjust. I had a screen recording of the acceptance, but other than that: no video, no separate audio, nothing. Still, I was confident I could replicate it the next day.

Except… I couldn’t. I tried again. And again. And again. Probably another 100 attempts, but the system wouldn’t fall for it. I even had a camera rolling, documenting each failed attempt, but nothing. It was like hitting a rare glitch in the Matrix: a one-time fluke I couldn’t replicate. I felt like one of those YouTubers doing trick shots; you only see the perfect one, not the thousand failed attempts. Except, in my case, I never sank the basketball from 201m again.

It’s possible I got lucky, slipping past the bank’s checks on a technicality. But if you’re asking whether I have proof of that one successful attempt, the answer is no. All I’ve got is my word – and a whole lot of failed footage.

So the short you see combines the screen recording with the AI voice. The screen recording is real, but I didn’t capture the audio from the attempt that actually got through. I missed it. I was in two minds about whether to release this at all, as it was a fluke and I can’t do it again. Maybe in a few more months, when the technology gets even better, I’ll be able to reproduce it. But right now, I can’t.

The Bigger Picture

As I started thinking more about how these AI models work, I realised something: there’s likely a built-in safeguard – some kind of artefact or anomaly designed to prevent exactly what I was trying to achieve. AI is smart, but it’s not perfect.

There are even tools that claim to “identify if an audio is real or AI-generated with 92% accuracy.” But when you look closer, it’s not as simple as it sounds. A 92% accuracy figure doesn’t mean that exactly 8 out of every 100 AI-generated clips will pass undetected. Accuracy is averaged over everything the tool judges, real recordings and AI clips alike, so the share of AI clips that slip through can be higher or lower than 8%. It’s also more of a sliding scale: the tool gives a probability score, and where the cut-off is set decides how often it errs in each direction. So, while I may have gotten lucky that one time, it’s not a reflection of the overall system being flawed, just a sign that AI is unpredictable, and maybe I slipped through one of those small cracks.
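To make that concrete, here is a minimal Python sketch using invented numbers (not figures from any real detector, and nothing to do with my bank’s system). The `Confusion` class and both example detectors are hypothetical: each is tested on 500 real and 500 AI-generated clips, both score 92% accuracy, yet one lets twice as many AI clips through as the other.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Hypothetical counts from an AI-voice detector tested on labelled clips."""
    ai_flagged: int    # AI clips correctly flagged as AI
    ai_missed: int     # AI clips wrongly passed as real
    real_passed: int   # real clips correctly passed as real
    real_flagged: int  # real clips wrongly flagged as AI

    def accuracy(self) -> float:
        # Share of ALL clips (real and AI together) judged correctly.
        total = self.ai_flagged + self.ai_missed + self.real_passed + self.real_flagged
        return (self.ai_flagged + self.real_passed) / total

    def ai_miss_rate(self) -> float:
        # Share of AI clips only that slip through undetected.
        return self.ai_missed / (self.ai_flagged + self.ai_missed)


# Made-up numbers: 500 AI clips and 500 real clips in each case.
balanced = Confusion(ai_flagged=460, ai_missed=40, real_passed=460, real_flagged=40)
lopsided = Confusion(ai_flagged=420, ai_missed=80, real_passed=500, real_flagged=0)

for name, c in [("balanced", balanced), ("lopsided", lopsided)]:
    print(f"{name}: accuracy={c.accuracy():.0%}, AI clips missed={c.ai_miss_rate():.0%}")
```

Because accuracy blends both kinds of mistake, the headline number on its own says little about how often a cloned voice gets through; the miss rate on AI clips is the figure that answers that question.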

And this is where the real challenge lies. The technology is advancing fast, but it’s not yet foolproof. Even tools designed to detect AI-generated content have their limitations. It’s a reminder that while AI can be impressive, it’s far from perfect.

The Takeaway

So, what does this mean for the future of banking security? To be honest, not a whole lot. The circumstances that had to align for this to work are so rare that it’s almost not worth worrying about. For starters, an attacker would need access to your account, know your passwords, get through multifactor authentication, and be on a device that doesn’t trigger any security flags.

Even then, they’d need a high-quality recording of your voice, run hundreds of attempts like I did, and somehow avoid raising suspicion. It’s like trying to line up holes in hundreds of blocks of Swiss cheese. Sure, it could happen, but the odds are astronomically low.

What did surprise me, though, was how lenient the bank’s system was after all my failed attempts. At no point did they lock me out or force a more stringent authentication process. That’s something I’d expect them to tighten up – after a few failed attempts, it should ask for additional verification or re-authentication. But hey, I’m no cybersecurity expert; I’m just an automation enthusiast who decided to see if AI could break the system.
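The lack of a lockout matters more than it might seem. Here is a rough Python sketch, assuming (purely for illustration) that every cloned-voice attempt has the same small, independent chance `p` of being accepted; the 1% figure and the `chance_of_any_success` helper are made up for this example, not something I measured. Under that assumption, the chance of at least one pass in `n` attempts is 1 - (1 - p)^n.

```python
def chance_of_any_success(p: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds,
    when each try succeeds with probability `p` (a hypothetical figure)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # Complement of "every single attempt fails".
    return 1.0 - (1.0 - p) ** attempts


# Hypothetical 1% per-attempt pass rate; 150 is roughly how many tries I made.
p = 0.01
for limit in (3, 10, 150):
    print(f"{limit:>3} attempts allowed -> {chance_of_any_success(p, limit):.1%} chance of a pass")
```

Real attempts aren’t truly independent (I was tweaking settings between tries), so treat this as back-of-the-envelope maths. The shape is the point: the more tries a system allows, the more a small per-attempt chance adds up, which is why capping failed attempts is such a cheap safeguard.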

At the end of the day, this experiment wasn’t about hacking the bank or undermining their security. It was about pushing the boundaries of what this technology could do – seeing if AI could fool a system that’s meant to identify us by something as unique as our voice.

It worked once, but that doesn’t mean it’s a real threat to anyone’s security. If anything, it’s a reminder that while AI is advancing quickly, it’s still got its limitations. And when it comes to the safety of our personal information, those safeguards – imperfect as they may be – are still doing their job.

Just FYI, before publishing this I reported it to my bank. I told them I couldn’t replicate it, and it has been passed to their Customer Authentication team for further investigation.

If you enjoyed this article, there are many more to come in written and video form.

Subscribe to my revamped YouTube Channel

Or join the mailing list to be alerted to new automation news and articles.